Audio Analysis & ML

When performing audio analysis for ML, we examine and transform data as digital signals. For this purpose, we use various technologies to understand sound, including state-of-the-art ML algorithms. This process has already gained enormous traction in several industries, such as entertainment, manufacturing, and healthcare. Following is a list of the most common uses.

Speech recognition – With NLP (Natural Language Processing), computers can recognise spoken words. Using voice commands, we can control PCs, smartphones, and other devices and dictate texts instead of manually entering them. Popular examples include Apple’s Siri, Alexa by Amazon, Google Assistant, and Cortana by Microsoft.

Voice recognition – A voice recognition system identifies people based on their distinctive voices rather than isolating individual words. Users can authenticate themselves using this approach, which finds applications in security systems. In the banking sector, for example, systems use biometrics to verify employees and customers.

Music recognition – Well-known apps like Shazam use music recognition to identify unknown songs. It is also possible to classify musical genres based on the audio analysis of music. For example, Spotify’s proprietary algorithm can group tracks into thousands of categories/genres.

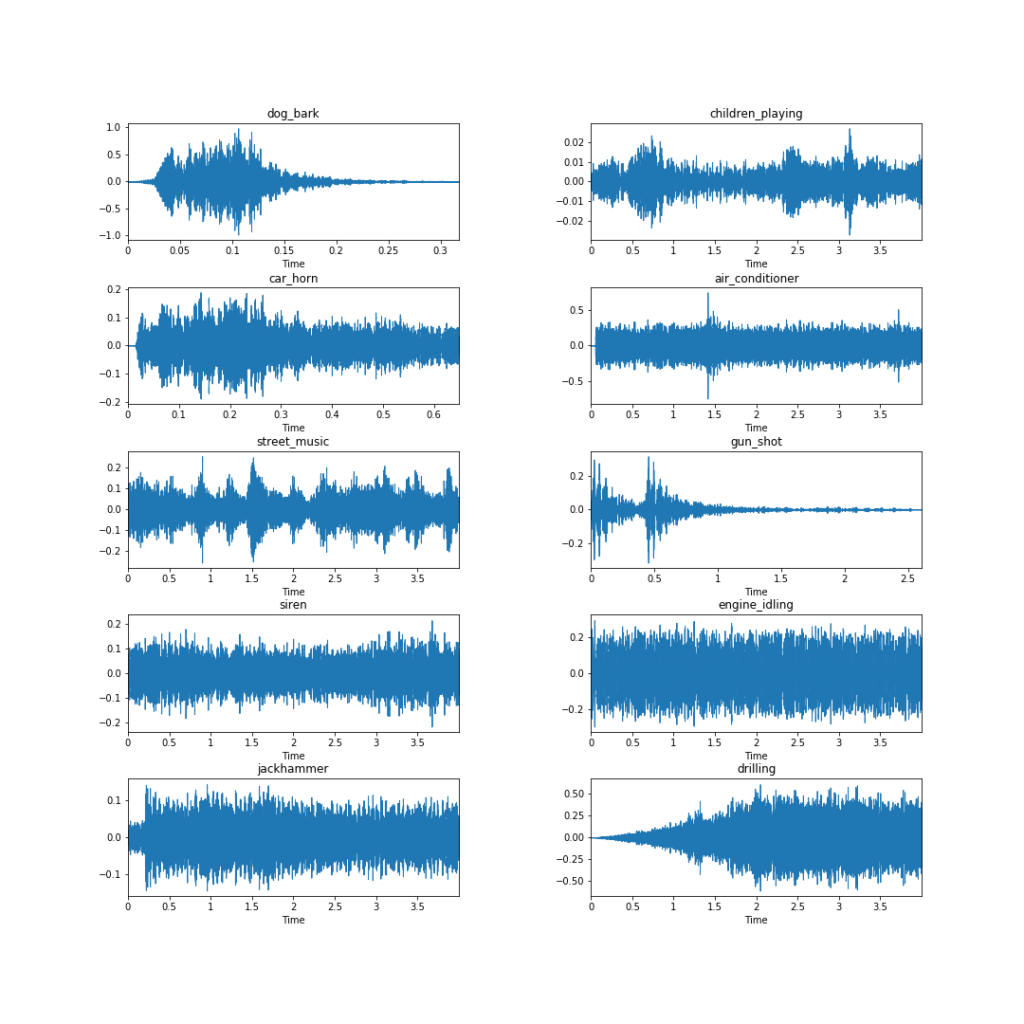

Environmental sound recognition – Identifying noises around us is the focus of environmental sound recognition. It promises a lot of advantages for the automotive/manufacturing industries, as well as for everyday life. IoT applications, for example, require a thorough understanding of the surroundings.

In case you fancy reading some well-written articles and tutorials on the above topics, here’s a small list:

- Audio Deep Learning Made Simple: Sound Classification, Step-by-Step (link);

- The Ultimate Guide To Speech Recognition With Python (link);

- Machine Learning on Sound and Audio data (link);

- How to apply machine learning and deep learning methods to audio analysis (link).

Details About Our Service

We can help you realise your ML project end-to-end, meaning that we will handle the following steps with the utmost care:

- Obtain the required data specific to your projects. We’ll also make sure that all data are stored in the required format;

- We will prepare your audio data by utilising appropriate tools and software. After all, the data need to be in the best possible shape for the ML model to be performant;

- We will extract features from your audio data for use with the ML model. Most of the time, visual representations are generated from audio data, and in turn, used to feed the model;

- Research and select the best-possible algorithm to supplement and train the model;

- Validate and test the effectiveness of the model on a clearly defined test set;

- Deploy the approved model. For this, we usually use GCP tools, but we can work with you to deploy to the cloud service provider of your choice;

- Monitor model performance over time (for a mutually agreed time span). Possibly gather fresh data down the road, and repeat the above steps to update the deployed model;

- The price will depend on the nature and length of the project. Please get in touch with us for more questions and to elaborate on your unique business case.

Finally, if you’re interested in looking behind the scenes or experimenting on your own, check out the following tutorial. You’ll learn how to build a Deep Audio Classification model with Tensorflow and Python. Enjoy!